No more AI movie trailers, please

Fake movie trailers have been the prevalent genre of AI-generated films. We're ready for the feature presentation.

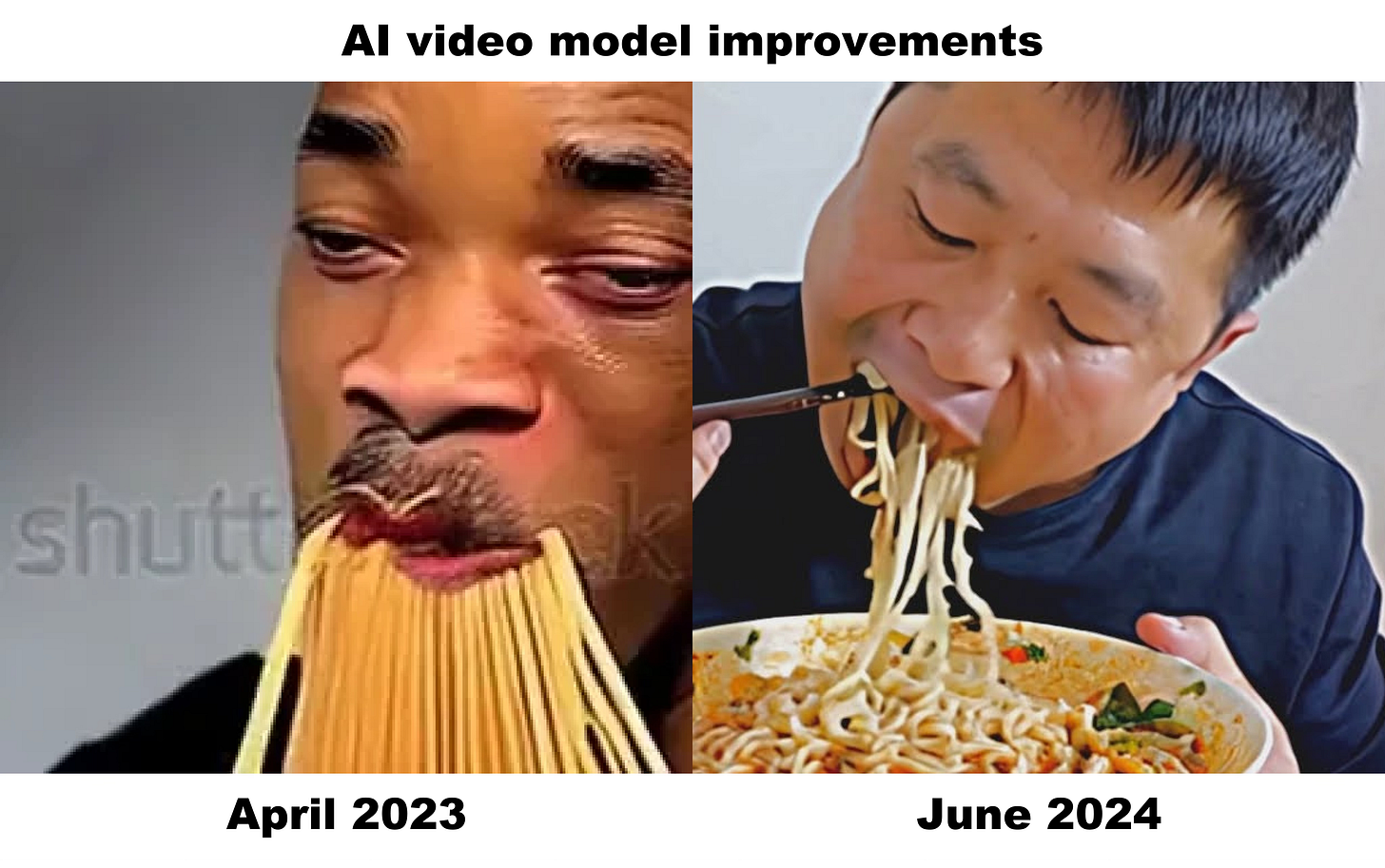

AI video technology is passing benchmark after benchmark. It’s achieved a level of image quality few thought was possible this soon. I scroll by AI-generated videos every day that would have dumbfounded top research labs only two years ago. Technological capability is moving at an astonishing rate.

At the same time, the experience of using the technology is still deeply frustrating. The video models don’t follow your instructions. The outputs rarely convey your intention. And many ideas simply cannot be executed.

Casual viewers of AI-generated media are spared the degree to which these images betray the intentions behind them. They do, however, recognize that AI videos are not compelling as stories. These videos are mostly entertaining as technical demonstrations that let viewers marvel, Wow, AI can do that! And that too!?

This is the odd situation AI video occupies today. It routinely astonishes even the biggest skeptics and proves them wrong. But it fails to entertain normal viewers.

The shortcoming is due to the technology’s inability to do basic stuff that’s fundamental to visual storytelling. Most AI video creators cope by sticking to friendly formats that avoid the limitations of the models: trailers, commercials, and anything that is a narrated list of images. These choices are fair workarounds. It is, after all, the early days of this strange medium. Other filmmakers are opting for a second choice: using the limitations as a deliberate aesthetic.

For the last 18 months, we’ve seen too much of the first category, and we are only recently seeing the second.

In this piece I’ll talk about:

The limits of AI video models

How these limits lead filmmakers to rely on certain formats

Why those formats are boring

The more interesting alternative

And a hopeful nod for the rest of 2024.

Limitations of video models

Generative AI’s promise to translate ideas into video is, to put it mildly, exciting. Enthusiastic artists sit down teeming with concepts. They prompt, and generate, and re-prompt, but eventually realize most of their ideas are not quite achievable. At least not on their terms.

They keep generating, and iterating, and end up with a pile of very cool images united by some common theme. Sometimes it’s a look, like 1950s Panavision. Other times it’s a concept, like Shrek as a mafioso.

But it’s not really a cohesive story. It’s a litany of related images, missing many of the key shots needed to hold it together.

This situation is a common one, caused by common limitations in AI video models.

What are these limitations?

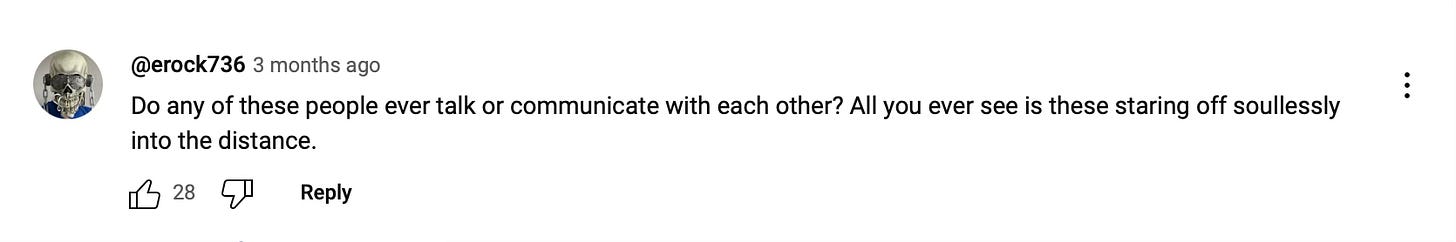

Bad Lip Syncing: It’s hard to show people talking convincingly.

Character Consistency: It’s hard to keep a character consistent, especially across different angles.

Multiple characters: It’s hard to relate two characters in the same frame, especially if they’re doing different things.

Movement: Characters/objects lose consistency and defy physics when they move.

Camera movement: It’s hard to direct camera movement.

Choreography: It’s hard to direct object movement.

Morphing: Weird stuff happens all the time.

In short, artists lack control. These problems aren’t unsolvable with the proper workflows, but they are enormously annoying. So what do artists do with this firehose of images?

They fix it in post, a place where they do have control. Bad lip syncing? Use voiceover. Bad consistency across angles? Stick to one angle. Hard to direct movement? Use still shots with minimal movement.

These workarounds naturally lead to editing lists of related images unified by voiceover, a formula that’s great for commercials, music videos, and, of course, fake movie trailers.

The shortest road to content

Let’s return to the enthusiastic artist generating images. After a few hours, they have several hundred images in the style of their choice: say, Star Wars with a 1950s Panavision look.

They want to share their work in a way that’s recognizable as ‘content’. But the images won’t fit into the shape of a story. So they string the images together with voiceover and get something like this…

This example is not remarkable. There are thousands more like it. Just a few include:

And here’s a dozen more.

AI video creators choose the movie trailer format over and over. Most of them crib existing intellectual property. But there are also original ideas.

The reason people gravitate to the movie trailer format is simple. It lets them show off ‘just the cool stuff’ in a recognizable format that hides shortcomings.

The above trailer is, indeed, cool. But it’s also not much beyond cool.

Current video models are not ready for narrative filmmaking. They can generate cool images, but not with enough control to tell stories the way audiences expect. Our enthusiastic artist still wants to make content with their generated images, though. After all, isn’t the enthusiastic artist, after 8 hours of generating images, entitled to a piece of content? In the age of the content-ization of all pursuits, the answer is emphatically yes. And fake movie trailers are often the shortest path to shareable content.

Fake movie trailers are not a bad thing. And I pass no judgment on the enthusiasm that leads to them. The format succeeds as an easy on-ramp into the space. The issue is that fake movie trailers stink as stories, or as anything beyond demonstrations of what’s possible.

For the moment, this pairing is one of practicality. It’s allowed a lot more AI videos to be seen. Fake trailers are easy to watch, understand, and explain. This channel of only fake movie trailers has over 30 million views.

So the marriage makes sense. But we can still hope for a more suitable match.

A more suitable match

The solution to making better films is rarely better technology. More often it’s better direction, writing, and creative choices. AI video is no exception. And increasingly, some filmmakers are embracing the imperfections of AI video models in deliberate ways.

Two filmmakers are great examples of this: Abel Art and Damon Packard. They both have modest YouTube channels and quietly make some of the most compelling AI films out there.

Abel is aware of all the common problems in video models: bad lip syncing, warping images, stiff movements. But he masks these problems in ways that make them look like deliberate aesthetic choices. Here’s a 30-second sequence from “The Prometheus Expedition”, a film framed as a black and white German-language documentary.

Abel knows how to choose technical aesthetics from film history to cover up the problems of generative video. For example, the professor’s dubbing hides bad lip syncing. We hear the ‘original German’ for a moment before hearing the crudely dubbed English. Older documentaries often have the same jarring dubs, so it plays as a legitimate style that we’re accustomed to as media viewers. Soft-focus b-roll of the doctor disguises low resolution as a contemplative docu-style. And of course, the entire film has a grainy, archival aesthetic that both mimics a real documentary and hides imperfections. Abel’s other films (like The Dinner & Le Voyageur) are also quite remarkable.

Then there’s Damon Packard. Packard is a well-known underground film director who’s made “hard to categorize, totally independent films since the 1980” [1]. He’s a filmmaker with a long streak of unfettered eccentricity. And now with generative AI Packard makes… well, it’s hard to say what he makes. But they’re definitely not fake movie trailers. By my estimation, he is making whatever he wants.

Packard loves to mix AI-generated clips with real archival footage. At first, it’s clear what’s real and what’s not. As he intercuts the two, you lose track of what’s what. The films are, I’d argue, weirdly watchable even if you didn’t know they were AI-generated. High praise for generative video.

In addition to mash-ups, Packard also does fully generative videos with little context and no plot, just atmosphere. He’ll do a 3-minute shootout. Or a film about a 7-11 parking lot overrun with homeless people.

I find these films remarkable because they are not concerned with flawless photorealism, or avoiding warping, or hiding AI video’s weaknesses. They cultivate a Cinema du Weird out of the model’s habits. The strange AI-isms are, in some ways, the point.

Here’s a film about Eddie Murphy seeing his own movie in a Palisades theater filled with poodle moms. It is exactly what it sounds like.

Packard’s videos are exciting to me because they don’t mimic existing formats. They are weird things that feel alive. I know a lot of AI filmmakers. Most of the ones in Los Angeles, at least. I can safely say that Damon Packard is chasing something quite different from everyone else.

To me, films like these are a sign that the nascent space is changing. The first act of the AI video wave has been largely determined by technologists and tech enthusiasts searching for fidelity by imitating existing forms. As this technology gets into more hands, the second act may have a different shape.

So what’s next?

As more filmmakers use AI video models, we’re seeing videos that are less concerned with demonstrating the technical edge of the models, and more concerned with doing something interesting.

As the trend continues, I expect the most differentiated AI videos will not come from the artists with the most pimped-out workflows, or the artists rendering the highest-resolution images. They will come from the artists willing to try very different things.

At the backs of these artists is, of course, constantly improving technology. The newest generation of video models (Luma, Kling, etc.) is proving quite good. Meanwhile there have been exciting, smaller breakthroughs in controlling characters with Live Portrait and Hedra. Control, as I’ve tried to hit home, is much more important than fidelity. Filmmakers will gladly trade realism for control.

Last November we organized a screening at Sony Studios of what we thought were exemplary films made using AI. It was an important time capsule of where the space was, technically and artistically. This year’s screening will showcase this second wave of AI media. I hope it will serve as a similar snapshot of the moment.

For the rest of 2024, I expect we’ll start seeing AI videos branch out from familiar formats like narrated lists and into less familiar stuff. These videos will look weird at first, the same way TikToks would confuse someone in 2002. But gradually they will become a normalized part of our media diets.

So please, no more fake movie trailers. I’ve seen Harry Potter as a mafioso, as an anime character, as a Soviet Russian. I’ve even seen Harry Potter as directed by Wes Anderson. I don’t need to see any more fake movie trailers.

I’d like to see your idea. And if it’s weird or unfamiliar, all the better.

[1] Filmmaker Magazine article on Damon Packard, by Deniz Tortum

Great article Mike! Wondering if you've seen the Unanswered Oddities series from Neural Viz? https://www.youtube.com/watch?v=YGyvLlPad8Q&t

What's extra weird (which you do explain) is that "Trailer for X if it were made in year Y" has been a YouTube cliche for at least a decade. It's AI solving a problem we very much do not have. As you say, it must come more from "well I made this content, now how can I stitch it together?"

Also 1950s Leia looks like Vivien Leigh to me.