AI Scams & Shams: Scaling the wrong ideas

AI enables people to launch ventures more easily. Many are shoddy businesses, scams, & low value enterprises. Let's look at a few.

A lot of people ask, “What can AI do?” I prefer to ask, “What can people do with AI?”

And the answer is people can do a lot more, faster, and with fewer resources.

I’ve written about the positive potential of generative AI, especially in filmmaking. Today I’m writing about the negative potential. Specifically how AI can multiply small acts of short-sighted greed.

Most ventures require resources: people, equipment, time, money. And getting resources requires a reputation, some track record of pro-social behavior and past success. These requirements often disqualify people on the basis of character or circumstance. Shy people, non-native speakers, and misunderstood eccentrics have all historically failed to get a shot. But these requirements also barred scammers and lazy opportunists. As much as gatekeepers are the enemies of progress, they do a decent job filtering out nutjobs and con artists.

Generative AI is exciting because it’s a back door. While making a movie traditionally required a Kubrickian ego to rally the support of people and institutions, that’s no longer the case. Now everyone with a computer gets a shot.

But everyone means everyone. Including people with bad ideas. Everyone gets a digital workforce to carry out their whims. Just as online scams were a major subplot of the internet, scaling bad ideas will be a major subplot of the generative AI boom.

Projects of Dubious Value

So what are people doing with AI? They’re launching enterprises all the time, all over the world, in every field. People are writing books, building AI assistants, creating comics, launching services, and more.

I observe the activity in this space closely. I enable a lot too through my work. But not all of it is well thought-out. I’ve seen a handful of what could generously be called low-effort projects of dubious value in pursuit of profit. Other people might call these “scams”. I don’t think that quite captures what’s going on.

Whatever we call them, these projects are clarifying some accelerating trends for me. The macro trend is that AI lets people execute on ideas with minimal outside help. It’s exciting when the project is a film or business. Less exciting when it’s an ill-advised cash grab.

Here are a few patterns I’ve noticed.

AI can make stuff really easily

AI can act instantly

AI makes high-effort, low-return work worthwhile

AI lowers the value of images and muddies their signal

People associate ‘polished images’ with ‘legitimacy’ and this is a vulnerability.

AI entrepreneurs underestimate the difficulty of bridging digital success into the physical world.

Today I want to write about these by detailing three, shall we say, Projects of Dubious Value and Minimal Effort. Each involves someone executing quickly on a poor idea to make money.

AI Authorship & Knockoffs

First let’s talk about AI-generated books. Many writers are pissed about this. They’ve spent a lifetime building up a professional ‘moat’ of expertise. But suddenly strangers can recycle their knowledge and generate books in less time than it takes to cook a frozen pizza. This topic is being debated all over the internet. Generally, I don’t think it’s a big problem until you combine it with SEO hijacking and mild identity theft.

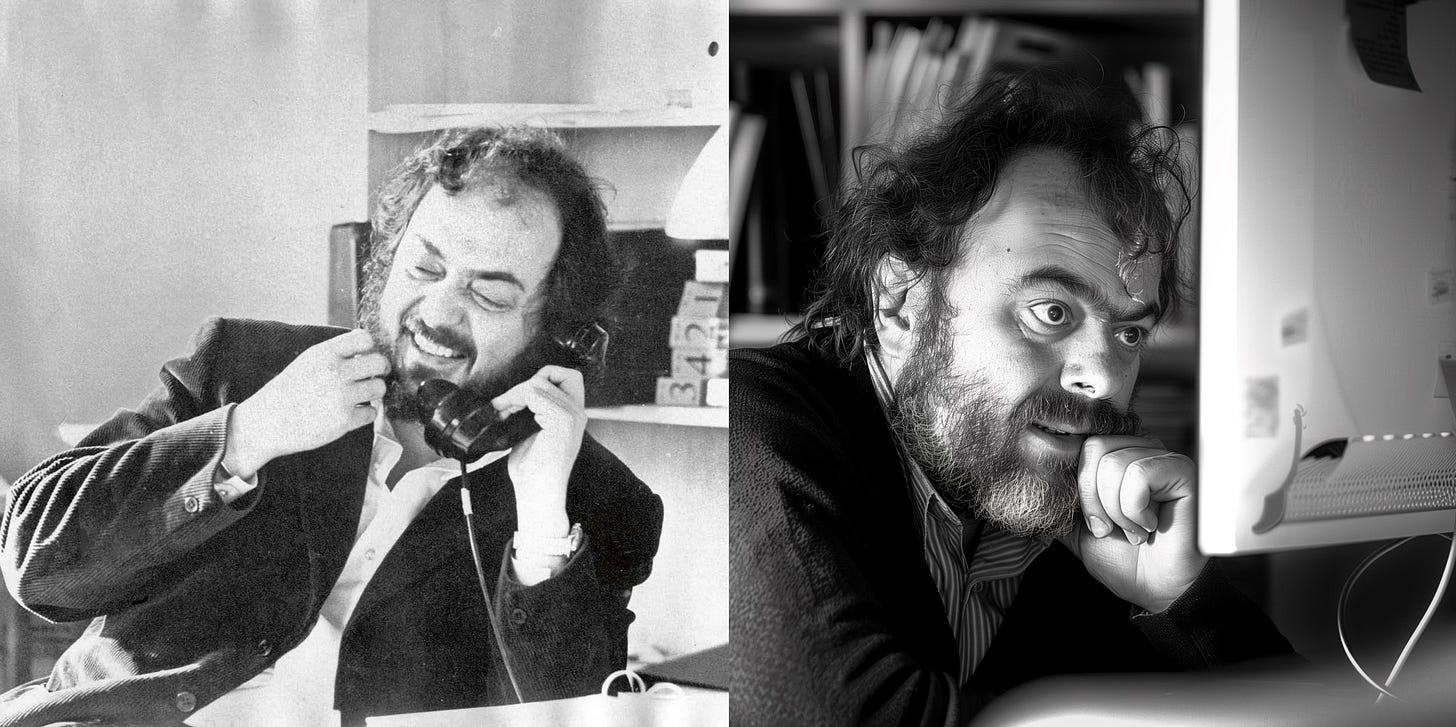

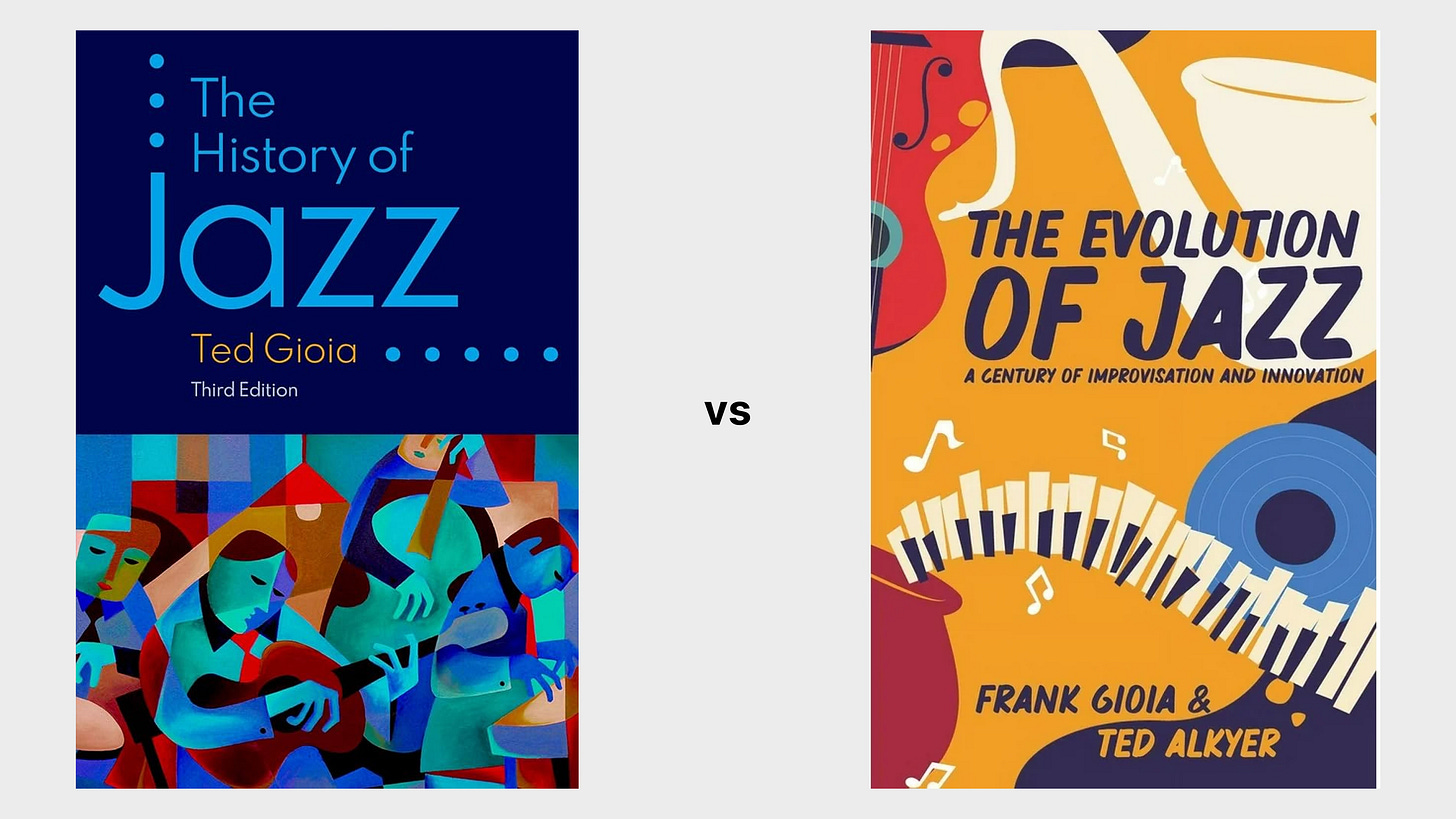

My uncle Ted Gioia writes books about jazz. He’s popular enough that algorithms probably recommend his books to people who like jazz. And people probably google phrases like ‘Gioia jazz book’. He’s been writing books for 30+ years now, and been reading, researching and listening for his entire life.

Then Frank Gioia started writing books about jazz. At an astonishing rate. And with similar cover art and titles. He was even co-authoring with Ted Alkyer (who sounds very similar to another established jazz writer, Frank Alkyer).

What’s going on here? Well, I can hazard a pretty good guess.

Someone rewrote Ted’s book with AI, then published it under a slightly different title with the intention of confusing people and algorithms into mistaken purchases.

Here’s an excerpt from Frank Gioia’s book:

Feel the rhythm of "The History of Jazz Music," a soul-stirring exploration into the rich tapestry of jazz from its humble beginnings to its status as a global phenomenon. This book is an invitation to swing through the epochs of musical innovation, where improvisation meets inspiration, and every page sings with the spirit of jazz.

Aside from the fact that the blurb confuses the title, there are obvious GPT-isms here. Dear readers, I am warning you to be suspicious of the word “tapestry” from here on.

This book-recycling hustle wouldn’t be worth the effort normally. Manually paraphrasing a book is a time-consuming task with low expected return. But AI makes it easy enough to be worthwhile. All Frank Gioia had to do was copy the book’s text, paste it section-by-section into an LLM, and give it instructions like “Rewrite the following book section using new words but keeping the same information.”

I suspect Ted wasn’t his only target. Frank Gioia probably programmatically scraped and rewrote hundreds of popular titles under false names. From what I can tell, Frank’s jazz book was a financial wash. It was removed from Amazon fairly quickly, though you can still see it sitting with zero reviews on WalMart. But across a couple hundred or thousand titles, Frank probably got enough hits to make his score.

Generating books with AI is an unfortunately common idea among AI impresarios. It’s not inherently evil, but it is low value if you aren’t adding substantial new inputs. And it speaks to a certain casual attitude towards quality. In the next example we’ll see what happens when the same attitude is brought to real world projects.

Candy-Coated False Advertising

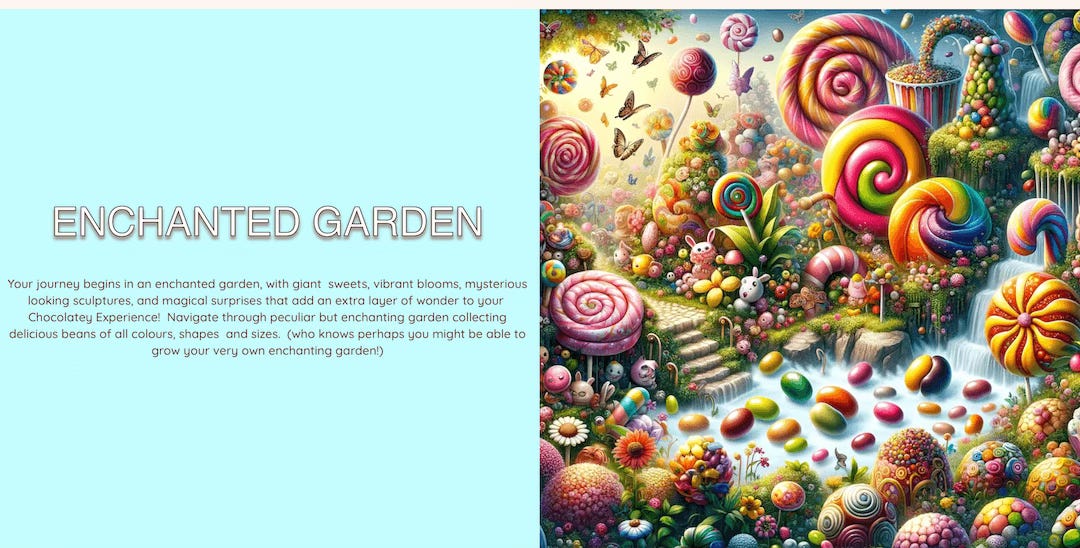

Scottish AI entrepreneur Billy Coul had already published over 12 AI-generated genre novels when he decided his next project would be hosting a live ticketed Willy Wonka Wonderland event in Glasgow. He didn’t have the resources or experience to put on a live event, but he did know how to create marketing materials with AI. So that’s what he did.

And it worked! Flocks of parents bought $35 tickets to his live experience.

But when attendees arrived, they found a sparsely decorated warehouse that was barely Wonkified. Quarter-cups of juice sat on folding tables with bargain-rate oompa loompas frowning over them. The event was one disappointment after another that resulted in angry parents demanding refunds and a social media revenge mob pillorying the Wonka imitator. People were furious at this poor excuse for a Willy Wonka Wonderland.

Now you could argue Mr. Coul wasn’t misleading anyone. That marketing is just marketing. False advertising has been around forever! But there’s a reason this incident felt different.

Marketing is an indirect signal as much as an explicit message. If an event has polished marketing, we assume the organizers have the resources to deliver on the promise. Even if the marketing stinks, if it looks expensive people think “This is real”. The medium is the message.

People are very good at picking up signals from media. Within seconds we can tell a TikTok is a TikTok and a b-movie is a b-movie. People are so aware of this that filmmakers often aim for production value over narrative value. They want it to look expensive, because that’s the signal they care about sending. And traditionally, it’s hard to make stuff look expensive! So it’s not a bad signal to send.

But generative AI scatters this signal. The majority of people haven’t internalized this idea yet. When parents see a Wonka Wonderland advertised with custom marketing materials, they assume it’s real and there’s real effort behind it. But in reality, the Imitation Wonka probably spent mere minutes generating the marketing art.

His mistake came when he brought the same casual effort to the physical event planning. I don’t think Mr. Coul set out to deliver a crap event. AI could help him with digital marketing. It just couldn’t help with physical event planning.

Let me pull out the takeaway here. Polished images are no longer a signal of competence or resources. False advertising has always been a problem, but it still required effort and thus still held a signal. Now it’s too easy. As I’ve written before, tens of billions of AI images have been generated. Very soon, most images we see will be AI-generated. This is a major update we need to make to our perception.

Obituary Attention Traps

Ranking first in Google search has always been a dark art. Now it’s gotten darker.

Obituaries are an odd genre of necessary writing. Whenever someone dies, their obituary is high-demand, very suddenly. In an attention economy, that’s valuable.

Recently, there’s been a flood of low-quality, error-prone obituaries for very commonplace people. Many people report googling a deceased friend’s name and finding obituaries on strange websites that seem to say “a lot without really saying anything at all”. The consensus is they’re AI-generated. This is the rare AI development where my dad actually informed me (Sidenote: 2 out of 3 items in this article were reported to me by older relatives).

The business model is as follows: wait for public death announcements from mortuaries, programmatically scrape publicly available data about the deceased person, then instantly spin up a low-quality article. If you’re first, people will stumble into your website and their attention is yours to monetize.

The resulting traffic from a single obituary is too small to be worth anything. But at scale, it’s enough to be significant. 10,000 obituaries a day starts to make some kind of economic sense. Deceased names and life details are essentially fertilizer for clickbait creation pipelines.
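To make the scale argument concrete, here is a back-of-the-envelope sketch in Python. Every number in it (visits per obituary, ad revenue per visit, generation cost per article) is a made-up assumption for illustration, not a measurement of any real site, and the function name `daily_profit` is hypothetical. The point is only the shape of the arithmetic: a payoff that rounds to nothing for one article becomes real money when multiplied by ten thousand.

```python
# Back-of-the-envelope economics of an obituary content farm.
# ALL numbers below are hypothetical assumptions, chosen only to show scale.

def daily_profit(articles_per_day, visits_per_article,
                 revenue_per_visit, cost_per_article):
    """Return (revenue, cost, profit) in dollars per day."""
    revenue = articles_per_day * visits_per_article * revenue_per_visit
    cost = articles_per_day * cost_per_article
    return revenue, cost, revenue - cost

# Assumed: 20 visits per obituary, half a cent of ad revenue per visit,
# and a tenth of a cent to generate each article.
ASSUMED_VISITS = 20
ASSUMED_REVENUE_PER_VISIT = 0.005
ASSUMED_COST_PER_ARTICLE = 0.001

for n in (1, 10_000):
    revenue, cost, profit = daily_profit(
        n, ASSUMED_VISITS, ASSUMED_REVENUE_PER_VISIT, ASSUMED_COST_PER_ARTICLE
    )
    print(f"{n:>6} obituaries/day -> about ${profit:,.2f}/day")
```

Under these invented rates, a single obituary earns pocket change, while the same pipeline run ten thousand times a day earns something closer to a salary. Change the assumptions and the totals move, but the multiplication does not.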

In the past, if you were gonna hunt attention, you would hunt big. Now, you can hunt small game. It makes sense to write an obituary for a small-town Mr. Smith because you can do it for every Mr. Smith across every town simultaneously.

The larger trend here is opportunistic news farming. We can expect a lot more of it. When there are predictable news items, AI content will get there first. Poorly and with many errors. But it will be first.

Retune Your Perception

There are plenty of other misuses of AI. Many are more sophisticated and malicious: AI voice clones of loved ones, AI cat-fishing, the list goes on. Today I just focused on how it multiplies lazy short-term thinking.

We’ll likely see a lot more of this stuff. We can expect:

Effort put into areas where it previously wasn’t worth the effort. The cost of playing is a lot lower.

Visual polish will no longer be a very good signal. Marketing in general will be a less clear signal.

A lot of disasters will occur as digital success tries to bridge into physical world success.

It will be weird, but people will adjust. And as the old signals stop being useful, we will find new signals. If you have other examples that fit with this article, feel free to add them to the comments.

You've touched on an important concept here. So let's expand it beyond just AI.

As the knowledge explosion generates powers of ever greater scale, everybody is further empowered, including the bad guys. As the bad guys accumulate ever greater powers, they represent an ever larger threat to the system as a whole.

As an example, generative AI might be considered a small potatoes threat compared to emerging genetic engineering technologies like CRISPR, which make genetic engineering ever easier, ever cheaper, and thus ever more accessible to ever more people. Imagine your next door neighbor cooking up new life forms in his garage workshop. What will the impact on the environment be when millions of relative amateurs are involved in such experimentation?

Discussion of such threats is typically very compartmentalized, with most experts and articles focusing on this or that particular threat. This is a loser's game that the experts play, because an accelerating knowledge explosion will continue to generate new threats faster than we can figure out what to do about existing threats. 75 years after the invention of nuclear weapons we still don't have a clue what to do about them.

If there is a solution (debatable) it is to switch the focus away from complex details and towards the simple bottom line. The two primary threat factors are:

1) An accelerating knowledge explosion

2) Violent men

If an accelerating knowledge explosion continues to provide violent men with ever more powers of ever greater scale, the miracle of the modern world is doomed. Somehow that marriage has to be broken up.

A key misunderstanding is the wishful thinking notion that the good guys will also be further empowered, and thus can keep the bad guys in check as the knowledge explosion proceeds. That's 19th century thinking. We should rid ourselves of such outdated ideas asap.

As nuclear weapons so clearly demonstrate, as the scale of powers grows, the bad guys are increasingly in a position to bring the entire system down before the good guys have a chance to respond. One bad day, game over.

You are right to focus on the scale of power involved in generative AI, and how bad actors will take advantage of it. Let's take that insight and build upon it.

If the above is of interest, here are two follow-on articles:

Knowledge Explosion: https://www.tannytalk.com/p/our-relationship-with-knowledge

Violent Men: https://www.tannytalk.com/s/peace

Phil (below) writes, "Discussion of such threats is typically very compartmentalized," and Mike says, "There are plenty of other misuses of AI," and invites us to list "others" in the comments.

I've read a number of articles (here on Substack and other places) on the possible pros and likely cons of AI, but they tend to warn us of how "bad" people will trick us into thinking this art is real, or this song, or this news article. We complain about AI interfering with our need to talk to a REAL person at the credit card company, or internet provider.

What I don't hear many alarmed about is the likely disruption of our election process this November. I was at a meeting with our State Representative, who happens to chair the Technology and Infrastructure Innovation Committee at our capitol. He is more than concerned - in fact convinced - that AI will be injected into the process by people (bad guys) and nations (bad countries). It will not only undermine the integrity of the outcome but erode the confidence we have in our ability to have a voice in our representative government. There are already too many Americans who have lost that confidence. The misuse of AI by those who feel their ideology is more important than our freedoms has the potential to harm us far more than the issues we tend to compartmentalize.

Mike ends saying, "We’ll likely see a lot more of this stuff." True statement. It's coming, and we need to be aware of the consequences.