“AI filmmaking” is an awkward term. It raises more questions than it answers. Try it in conversation and someone will invariably repeat back, “What’s AI filmmaking?”

The truth is that “AI films” cover such a broad territory of media, from videos generated entirely by software to films made by 30-person crews, that most answers are about as helpful as explaining what digital filmmaking is.

In the broadest sense, AI filmmaking points to any media that includes images produced with generative AI. But that’s a lot of stuff. A real answer requires a tour of the territory. And that’s what I’ll attempt in this piece: an armchair taxonomy of AI films.

Most AI media I see fits into one of five buckets.

I have my favorites. I’m sure you’ll have yours. Let’s look at each one.

1. AI-Generated Still Images

Still images generated with AI using text descriptions, or reference images + text descriptions.

This category refers to still images created using AI models like Midjourney, DALL-E, and Stable Diffusion. Obviously, still images don’t qualify as film, but single frames are the foundation of film. AI film, like conventional film, is a succession of still images. So let’s give AI-generated stills a paragraph or two. They are the basis of everything that follows.

AI-generated images were initially novel but are now widespread. The image engines have been humming and we’re already at McPicture status: over a billion AI images served. Some reports estimate 15 billion images have been generated with AI to date. Anecdotally, that feels accurate. In 2022, I only saw AI-generated images on niche feeds. Now, in January 2024, I regularly see them in the wild: in ads, YouTube thumbnails, blog art, event invitations, profile pictures, etc. Chances are you’ve seen plenty of these 15 billion images, whether you’ve known it or not.

Quality is quickly catching up with quantity. In early 2023, AI image models had real limitations: they struggled with hands, photorealism, and certain textures. Now they’ve cleared most of these hurdles. Each new version of Midjourney (a popular AI model for creating images) has been substantially better than the last. The progress over 18 months is remarkable.

While you see a lot of cool pictures, you don’t see many compelling concepts in this category. However, some entertaining storytelling formats have emerged. The most popular is the “X as Y” format, where people mash up contrasting IPs or aesthetics, like Family Guy as a multicam 80s sitcom or global elites as homeless people.

Ok, so why are “AI-generated still images” a category of AI film? Well, filmmakers are making videos with them. You can now make images via a conversational format, which is easier to screen record and allows for actual storytelling through progressive instructions. Storytelling forms are emerging that are legitimately fun to watch. I recommend this video about a cat’s fish heist gone wrong.

I’ve collected more examples here.

2. AI-Animated Stills

Still images that are animated to move or speak.

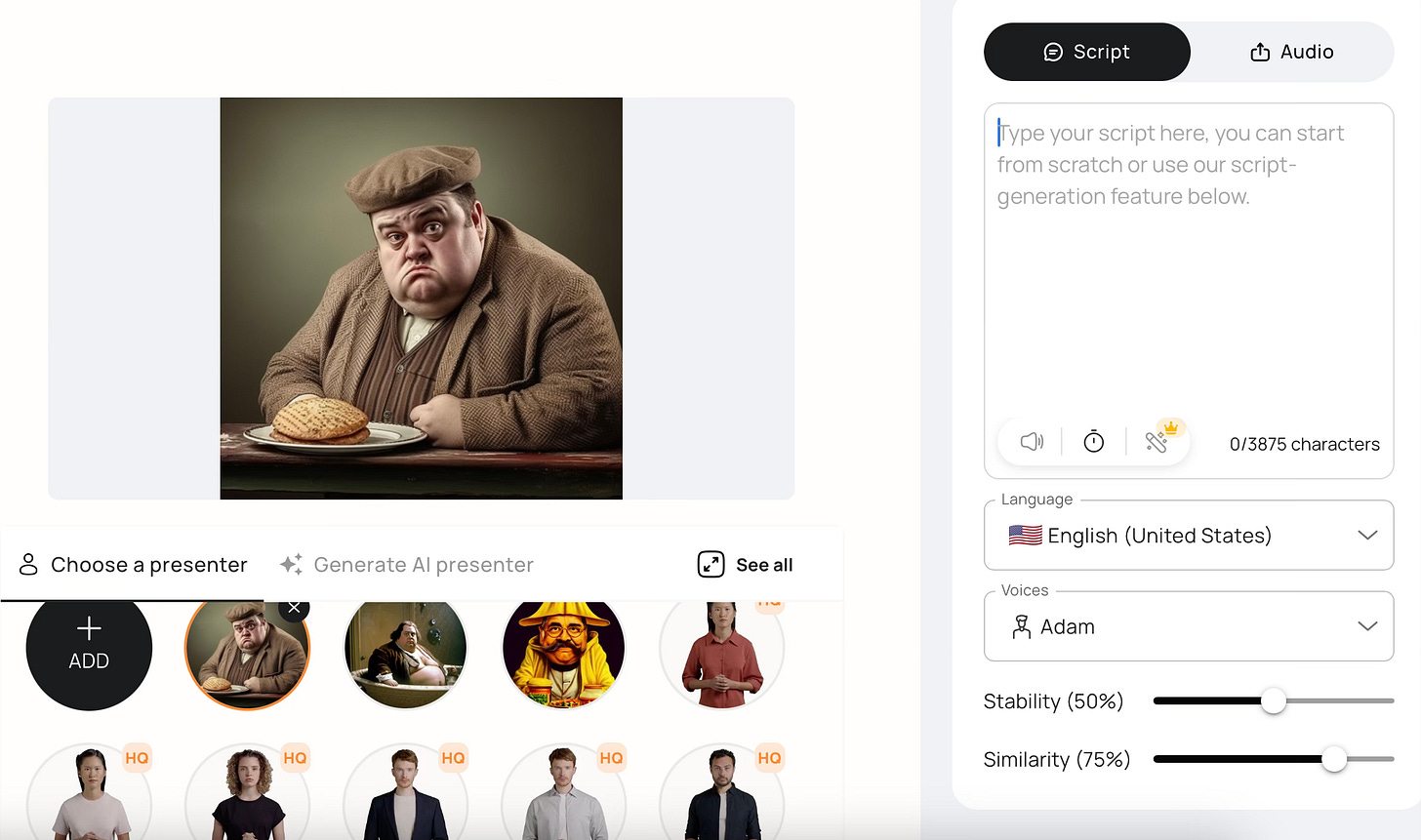

This category refers to images that are minimally animated using AI. Software like Studio D-ID and RunwayML can puppeteer images with simple movements: head tilts, blinking, camera push-ins, even mouth movement synced to sound (i.e., talking). These animations can’t introduce new elements or control complex movements very well. They just move existing parts of the frame in a simple, predictable way. Great for making a character sway slightly, not so good at making them dance. The most common use cases are making a character talk or adding slight cinematic push-ins to images.

These techniques allow people to turn images into what technically qualifies as video content. With 15 billion AI images out there, there’s no shortage of appetite for this.

We’ve seen some impressively creative stuff in this category as filmmakers work within annoying limits to make stuff that ‘works’ as video. These constraints have created interesting aesthetics. The limitations have led many to focus on slow cinema, with lingering shots and minimal movement. Notice how still each shot is in this Barbenheimer trailer. There’s a good argument that a major branch of AI film will be slow and still.

Of all the categories of AI film, this is the most watchable. Most viral AI videos are in this category. It’s also the most accessible for artists. You can do it on a normal laptop with software that’s less than $20/month. The workflow is simple too: generate still images, animate them into video clips, edit them together.

I’m personally fascinated with this category because it emerged accidentally, cobbled together by artists eager to ‘get to’ AI video. For new entrants, I recommend starting here.

More examples.

3. AI-Rotoscoped Videos

Real video rotoscoped frame-by-frame with AI.

This category refers to AI-generated video that is based, frame by frame, on real source video. If you’re familiar with rotoscoping from films like A Scanner Darkly or Waking Life, this is a pretty good analogy. You provide source video and text instructions to an AI model, then it recreates each frame with some added eccentricities, like a kid with tracing paper. You can do stylized rotoscoping, where each frame is re-rendered in a new style (live-action to animation), or transformative rotoscoping, where elements of a frame are transformed into totally new objects (your kitchen to the Temple of Doom).

For this category, it’s important to remember that ‘video’ is just a series of still photographs, typically 24 per second for film, played back quickly to create the illusion of continuity. When you feed a video to an AI model, it sees it as a sequence of individual frames and generates a corresponding image for each frame.
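To make the frame-by-frame idea concrete, here is a minimal Python sketch. It is not how any particular tool works: `frame_count`, `rotoscope`, and `toy_stylize` are hypothetical names, a frame is simplified to a small grid of grayscale pixel values, and `toy_stylize` is a stand-in that just inverts brightness where a real model would re-render the image from the text prompt.

```python
from typing import Callable, List

# Hypothetical simplification: a frame is a 2D grid of grayscale pixels (0-255),
# not a real video format.
Frame = List[List[int]]


def frame_count(seconds: float, fps: int = 24) -> int:
    """Number of still images needed for a clip at the given frame rate."""
    return round(seconds * fps)


def rotoscope(
    frames: List[Frame],
    stylize: Callable[[Frame, str], Frame],
    prompt: str,
) -> List[Frame]:
    """Re-render every source frame with the same prompt.

    Each output frame depends only on its matching input frame, so the
    motion and timing come from the real footage.
    """
    return [stylize(frame, prompt) for frame in frames]


def toy_stylize(frame: Frame, prompt: str) -> Frame:
    """Hypothetical stand-in for an image model: inverts pixel brightness.

    A real model would use the prompt; this toy version ignores it.
    """
    return [[255 - px for px in row] for row in frame]


if __name__ == "__main__":
    print(frame_count(10))  # 240 frames for a 10-second clip at 24 fps
    source = [[[0, 128], [255, 64]] for _ in range(frame_count(1))]
    styled = rotoscope(source, toy_stylize, "hand-drawn animation")
    print(len(styled), styled[0])  # 24 [[255, 127], [0, 191]]
```

The design choice worth noticing is that each output frame is produced from its own source frame alone. That is what lets the real footage dictate motion, but nothing in this sketch keeps neighboring outputs consistent with one another, which is one plausible source of the frame-to-frame eccentricities mentioned above.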

This category overcomes many of generative AI’s shortcomings. While fantastic at generating single images, AI models struggle with series of connected images that make sense chronologically and physically, frame after frame. For example, the AI model does not understand physics; it has only gleaned a fuzzy idea of it. It’s seen images of a ball falling with the label “ball falling”, but it doesn’t understand the laws of gravity that actually cause the ball to fall.

AI-Rotoscoping avoids these limitations by using the real world as a physics engine. With the frame-by-frame guidance of a video shot in the real world (where gravity, time, and your own dramatic intentions exist), you’re less likely to encounter unwanted glitches like someone’s mouth disappearing or gravity reversing. You can exert real control and aren’t at the whim of the AI’s warped guess at a plausible next frame.

I’m enthusiastic about this workflow. It allows artists to control the script, acting, and direction, while outsourcing production design, locations, and expensive stuff to AI. Some filmmakers are shooting scenes on homemade sets and then using AI to rotoscope them into anything. It’s a happy marriage that avoids the limitations of both AI and your basement.

No-budget filmmakers may flock to this category as a cheap way to overcome low production value while allowing them to still work in familiar mediums. Folkfilm legend Joel Haver has been using early versions of this technology to create funny animated videos for several years. He’s made dozens of these AI-rotoscoped videos, gone viral, and even been offered TV deals. All for $0.

More examples.

4. AI / Live-Action Hybrid

Photorealistic AI images blended seamlessly into real footage.

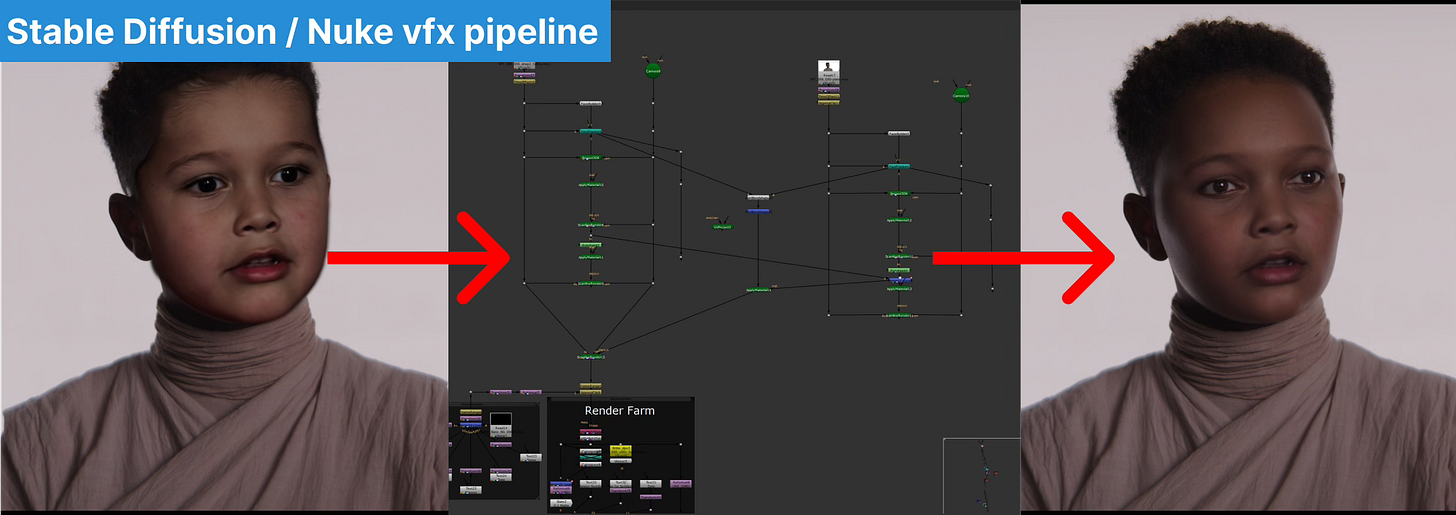

This category refers to live-action footage blended with AI-generated images. Combining real footage and digitally-generated footage is an extension of what Hollywood already does with CGI (computer generated imagery). And it’s really hard! When a frame is mostly real footage, there is a high bar of quality demanded of anything that will be added. If any part of the frame looks bad, then the whole frame looks silly. Success in this category means the AI visuals look indistinguishable from the real footage.

The most familiar examples in this category are deepfakes: digitally created faces that are blended into a real person’s face and constantly readjusted to ‘stay on’. You hear rumors of this technology allowing Harrison Ford to star in movies forever. It’s certainly plausible. However, the current use cases look more like YouTubers putting Arnold Schwarzenegger in every movie. Hollywood, a face-obsessed industry, will likely play in this sandbox with some strange motivations.

Deepfakes are the easiest media to point to here, but the category is much broader. We haven’t seen very diverse examples here yet, because the difficulty of this category makes it less accessible to low-stakes experimentation.

Paul Trillo is an early and active innovator here. He regularly posts tests on social media like AI-generated objects appearing on a table. We can debate how well he achieves the illusion, but clearly the intention is for total photorealism and he’s getting closer every month. His music video “Absolve” is a sort of AI thesis film that combines dozens of tricks.

Doing this category moderately well isn’t that hard. Doing it very well is. Highly realistic AI video doesn’t exist without tons of post-production skill and labor. You run into minute problems that aren’t issues for YouTube videos but are dealbreakers for high-production-value films. I spent 8 months of my life producing a film striving for this benchmark. Generating the AI visuals took a few hours. Compositing them into the movie in a convincingly photorealistic way took months. Fortunately, we worked with Digital Sorbet, an indie VFX company that is part of a new generation of VFX companies specializing in integrating generative AI into the traditional VFX pipeline.

I predict Hollywood and the traditional film industry will gravitate towards this category. It’s harder, more cost-prohibitive, and smells like the CGI they’ve been doing for the last 35 years. Hollywood won’t have the appetite for other categories of weirder AI filmmaking, especially if it’s the same process a YouTuber in Minnesota can do. The incumbent film industry will crave higher barrier-to-entry areas. And this one’s the hardest because it demands a high level of realism.

More examples.

5. Synthetic AI Media

Video completely generated with AI.

This category refers to fully synthetic video, generated entirely with AI. Unlike AI-rotoscoped video, this has no frame-by-frame guidance. You offer an initial prompt (text or a reference image) then leave the AI to determine each subsequent frame. This category is the frontier people are attacking most excitedly. Both as enthusiasts and enemies. And it’s rapidly improving.

In early 2023, video generation with Stable Diffusion was clunky, frustrating, and produced extremely bizarre outputs. Left to its own devices, the model made puzzling choices for the next frame. Figuring out how to influence it was a dark art.

In 2024, companies like Runway and Pika Labs have streamlined it. They’ve put up guardrails to make video generation much more consistent and user-friendly. I don’t totally understand what they’ve done, but the dark art has effectively been reduced to sliders and buttons. Open-source tools like Automatic1111 and ComfyUI have taken the opposite approach and expanded the art into as many buttons and sliders as possible. Both make the process better, and we’re seeing better outputs than ever. The pace of innovation promises a lot more improvements to come.

However, the best of these technologies still struggle with problems like temporal coherence and physics. As a filmmaker working in this category, you need to be okay with funky stuff happening out of your control. Paul Trillo’s totally synthetic film Thank You For Not Answering makes a virtue of the AI aesthetic, which is clearly on display as limbs, people, and backgrounds fluctuate.

There’s a lot of talent and capital working here, but to me fully synthetic AI film feels intrinsically limited. Filmmakers want control. And this category feels a lot like pulling a slot machine. You can provide a beginning frame, but you don’t know what will happen. As director Jon Finger points out, filmmakers want input. In every sense of the word.

More examples.

Parting thoughts & an honorable mention

In the short term, Category 2 (AI animations of still images) and Category 3 (AI-rotoscoping) are the most promising to me. I think they’ll find the most adoption and use cases. They give filmmakers more control. And they produce videos I actually watch and enjoy without thinking ‘oh this is AI-generated, how novel’. The other categories produce films that I find interesting as a measuring stick for AI quality, but don’t often enjoy.

I want to at least mention NeRFs (Neural Radiance Fields) here as well. I consulted a lot of filmmakers for this post, and many mentioned NeRFs as an important category. A NeRF is an AI-rendered 3D model of a space. Basically, you take 30 photos from 30 different angles, then create a 3D model that you can move around in realistically. I don’t know enough about NeRFs to say whether you can make a NeRF movie, but they’re certainly being used in films, at least for individual shots.

I may update this post in the future, so feel free to add critiques, nominations, and examples in the comments. If you have your own categories, please share them as well.
