Sending Sora to Film School

How a great cinematic data annotation might improve AI video models

AI video models are really good. They can make great shots. They just don’t make your shots.

It’s easy to generate clips that feel pulled from a real movie. But you can’t get the model to do what you want, when you want, how you want. More often than not, you feel like an interloper rather than a director.

Certainly we humans can get better at AI-whispering. I would argue, however, that the fault lies not in ourselves but in our models. Current video models give a narrow, awkward window of control. They are stubborn and uncooperative as collaborators.

We all acknowledge ‘the models will get better’. And they will. But how exactly will they get better?

AI researchers claim that AI models magically improve as you add more underlying compute (GPUs). Historically, that’s true. But that’s a boring answer. Let’s ignore it for the purposes of a fun essay. I promise we’ll pick it up in the end.

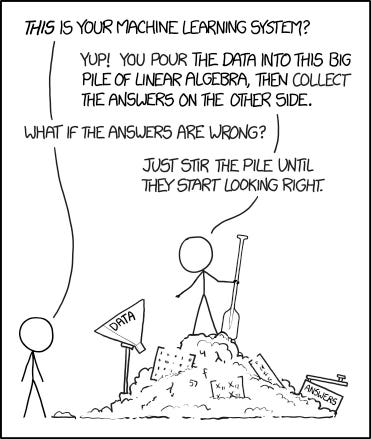

Besides magic stirring, how else can AI video models get better? They can train more, but they’ve already trained on everything— movies, newsreels, most of Youtube. The other idea is the video models can train smarter. They can get serious about their education. Choose a focus. The AI models need to go to film school.

This essay is a rough take on what that could look like.

Sections:

The Status Quo

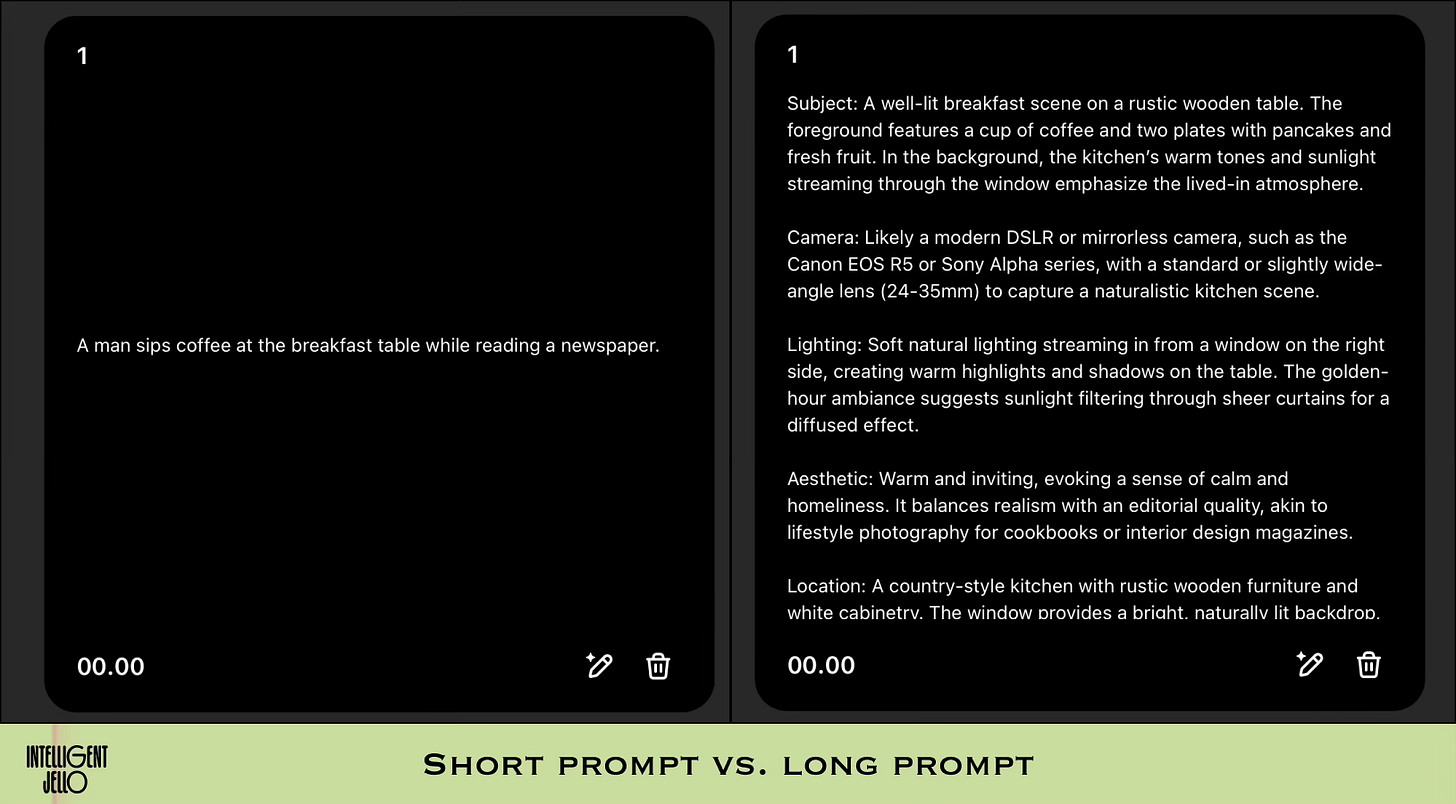

Right now we control video models mostly through text prompting. We describe what they should create and they create it. We can also provide a reference image. Or even a reference video.

There is no established vocabulary for controlling video models. People try all sorts of things. Some people write very simple prompts. Some write very detailed ones. Some use AI to write the prompts. One friend even swears by the idea of no prompt. He provides a reference image and no instructions because ‘the model knows best’. There is no consensus on how to prompt image models.

How a video model reacts to prompts is informed by its early life experiences. Researchers train it to react in certain ways through reinforcement learning. We underrate the influence these early value systems have.

Large Language Models (LLMs) are a helpful parallel. LLMs were originally completion models— they looked at text and simply continued it. LLMs functioned this way up through GPT-3. Then ChatGPT (GPT-3.5) was trained to handle everything as a conversation. This chat paradigm was instantly popular with consumers. Over 100 million people signed up in two months. ChatGPT wasn’t much smarter than GPT-3, but it was way more usable because it was trained to ‘chat with you’ rather than complete your text. People greatly preferred that.

Our film school will have a similar goal— make video models more reactive to the way people intuitively want to direct.

This leads us to a good question. How do these models learn?

Training an AI image model

Currently, AI models train the same way 5th graders study for a history test— with flashcards.

You train an AI model by showing it input-output pairs, usually on the scale of hundreds of millions. For example, you might show it a photo of the Roman Colosseum paired with the text description “The Roman Colosseum”. The description could also be longer like “The Roman Colosseum, a massive ancient amphitheater with its iconic elliptical shape and multi-leveled arches…”

Sometimes the flashcard has multiple things in a frame. In these cases, you annotate the image with boxes and other shapes to distinguish different parts of the frame.

That’s the basic idea. The model ‘looks at’ an image and a text description. While LLMs see words as tokens (clusters of letters), image models see pictures as patches of visual tokens. Over billions of flashcards, they start to map these patches with clusters of language.

There are two types of training. First, there is training a foundational model, which is very hard and requires an ocean of data. Then there is fine-tuning, which is taking an existing model and adjusting it to behave a certain way. For example, if you fine-tune an image model on one-million watercolors, it will learn ‘watercolor’ as the default style. So when a user asks for “a picture of a flower,” the output will be a watercolor by default.

Importantly, training is all based on images and text descriptions. This process makes a lot of sense for still images. It’s a harder to imagine for videos.

Training a video model

Videos, unlikes images, are not static. They change. A photo of the Colosseum remains the same, but a video of the Colosseum can change in any number of ways. The succession of images a video model generates has to convey a lot— movement, intentions, physics, even editorial choices like cuts.

Despite all this complexity, we still turn videos into flashcards. Or we try to.

The model watches a video and then reads a caption that describes the video. One representative method is called Differential Sliding-Window Captioning. The process relies heavily on two very imprecise tools— AI and human language.

Here’s how it works:

They take a video. It can be 5-seconds or 50-seconds.

They extract the keyframes of the video (every frame where the image noticeably changes, like someone entering frame).

They compare keyframes and write a description about how each keyframe is different from the previous one (i.e. what has ‘happened’ in-between them).

They compile all these descriptions in chronological order.

They summarize these into a singe description for the entire video (called the ‘caption’).

“They” refers to an AI— a series of specialized AI models do every step of this process.

The captions are, admittedly, quite descriptive. Often better than what I would’ve written. But one wonders whether an unadventurous research tone can satisfactorily educate a video model. And how effectively can a symbolic system like language teach a video model about cinematography and physics? How tall of a tower can we build on the flimsy scaffolding of language?

That’s an interesting question for another essay. For now, let’s keep thinking about film school.

Learning to watch cinema

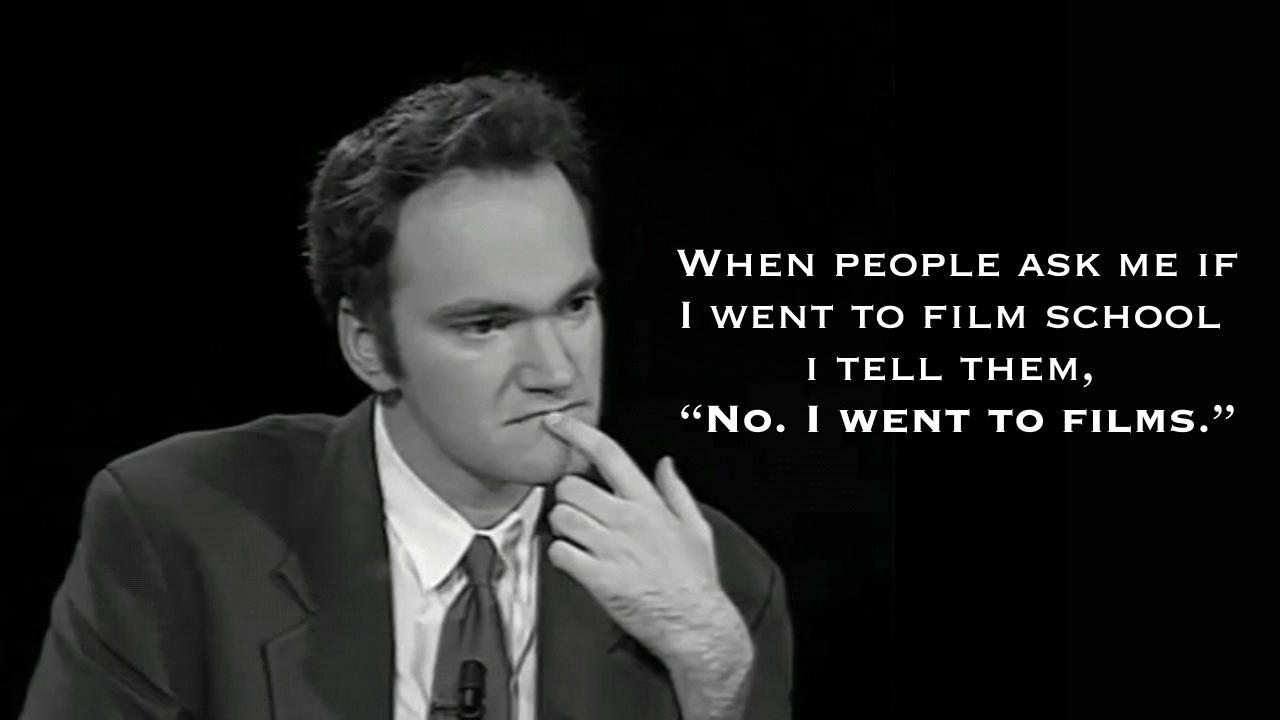

Now that we have some insight into how video models are trained, the question is how can we send one to film school? The logical answer is they need to watch more movies. Quentin Tarantino agrees, more or less.

The truth is these models have probably trained on all the movies out there— legally or illegally. Each year adds about 100,000 minutes of theatrically released cinema compared to 15.5 billion minutes of Youtube videos. Cinema is the minority of video.

So if we’ve already fed all the cinematic video to a model, our next option is to feed it in again. But better. The other side of the flashcard needs to be labeled in a way that teaches the model something useful.

A director once told me, “We’re in the business of image-making. We make images”. The quote stuck with me. Filmmaking is the business of planning and executing really specific photographs. A lot of money, planning, and labor go into every pixel of these images. As a result there is an enormous amount of documentation for these images. Specialized departments create storyboards, shot lists, lighting set-ups, lens specifications, etc. in preparation for making that image.

So you might say filmmaking is:

specialized equipment

operated by special departments

according to a special grammar

in order to photograph people and objects in space

In this vein, sending Sora to film school might consist of:

Annotating video with better metadata (equipment like lenses, lights, etc.)

Rewatching clips multiple times as different roles (departments)

Establishing a consistent prompting language through fine-tuning (grammar)

Training the model to treat people, objects, and scenery as discrete parts of an image so that it’s easier to direct.

Let’s quickly discuss each aspect.

1. Better Metadata

To the extent that film crews point tools (cameras and lights) at stuff (people and props), you can document these exact tools and their exact settings. This information includes everything from the focal length of the lens to the lighting setup and wardrobe.

Meticulous metadata doesn’t exist for most video, but it does for film productions. Adding more data, more systematically would help train our model.

2. Persona-Based Rewatching

Screenplays fail when they attempt to dictate not just dialogue, but also all the choreography, art direction, and minutiae. Screenwriters are gently told to relax because those details are someone else’s job.

Similarly, a monolithic description of a video misses a lot. If we use generic captioning models (the AIs that write descriptions of videos) we can expect generic descriptions. Instead, ten different models can watch the same video from ten different perspectives. One as a cinematographer to focus on lighting and camera movement; one as a production designer to focus on color palette and spatial relationships; one as an editor to focus on rhythm and narrative flow. Each viewing would add a layer to the model’s understanding of the scene.

Compartmentalizing their expertise gives us a chance to create formal language around parts of image-making. Which leads to…

3. Well-Defined Prompting Language

Fine-tuning makes AI more predictable. ChatGPT was far more useful than its GPT-3 predecessor because it wasn’t just a completion engine—it was trained to act like a conversational partner. It knew how to respond, what to refuse, and when to add polite filler.

The same idea applies to video models. If we train them to respond to a structured language, prompting becomes less of a dark art and more of a predictable system.

Directors want control over specific parts of the image (the set should look like this, the actors should do this, etc). People want to direct video models the same way. But they can’t because video models don’t understand discrete objects within an image except in a very general way that’s tied to the entire frame. If we train models in specialized perspectives, we can also train them to respond in those distinct modes. A director could call on each as needed (@set_designer do this or @cinematographer do that). Each would follow your directions in different, pre-trained ways.

Siloed expertise would, in turn, allow new paradigms for humans using the model.

4. New Interfaces for Using Video Models

Better training will allow the model to respond to new ways of prompting it.

A modular interface would let people create scenes piece by piece. The first prompt might establish the visual environment: "A lousy LA apartment covered in fast food wrappers." The second could define camera dynamics: "Slow, deliberate dolly movement." A third prompt could specify character action: "A disheveled wastoid in flannel rolls over on the couch to reach for a slice of a pizza."

We’re already seeing infant versions of this with features like Kling’s Elements, where users are able to upload characters, objects, and environments.

ChatGPT was a major improvement in usability for LLMs. People preferred talking to LLMs in a conversation. The goal of sending Sora to film school is to let people direct the way they want to. To make a video model that cooperates.

Creating the dataset

So how do we create the dataset? Here’s one approach. I was at a table recently with a film executive and an AI filmmaker. We discussed a Business Idea That Someone Will Do, But Not Us. This is a frequent pastime.

The concept was how a normal film school could be gamified into a training pipeline for video models. It basically worked like this:

License a library of movies and television.

Create software that lets students watch and tag clips in a fun, structured way.

Sell this software to film schools.

Students learn by tagging clips based on given instructions.

Collect all the tags into a big dataset.

Sell the dataset to AI video companies.

Adjust the tagging tasks to match AI models’ latest needs.

Repeat.

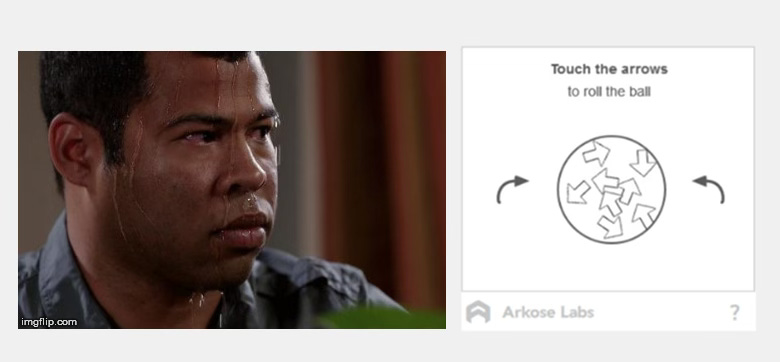

The idea is just a reskinned version of Google’s reCaptcha. As you may or may not know, every time you were given a captcha to prove you were human, you were actually labeling an image that was used to teach to an AI model. It was a clever way to crowdsource AI training as a real service. That’s why the captchas have become harder and harder. They need better and better data.

This process will exist soon, if it doesn’t already. And as a general rule, I expect we’ll see more disguised data labeling pipelines where people unknowingly train AI models. Be sure to check those terms and conditions.

The Big Obstacle

Our film school sounds fun, but fashionable AI opinion would reject our entire approach. The current wisdom advises, “Add more compute and keep on stirring.” AI doesn’t need a boutique film school if it can rewatch movies with more Nvidia chips. This is the bitter lesson that Rich Sutton famously articulated: researchers have a bias for approaches that are intuitive to humans, but real AI breakthroughs come from scaling compute. Elegant, human-centric approaches are promising in the short-term but trivial in the long-run. From this point-of-view, our entire enterprise may be a dead end.

Whether the lesson is true, however, is not as important as you think. There is a bigger obstacle to our project. And it’s very simple. No one knows the point of a video model.

AI systems are designed to follow goals. Chess models are superhuman because chess has a clear goal: checkmate the king. No one can make as clear a statement about film.

Without a goal, AI researchers optimize for photorealism as the closest approximation. But photorealism isn’t a real compass for where filmmakers want to go. Artists want to capture their intentions. Unfortunately, AI researchers don’t know how to articulate this concept. Our models won’t find their own purpose. We need to provide and reinforce it. It’s why more artists should work in the AI field.

So let’s revisit our project one more time. The point of sending Sora to film school isn’t just to learn.

Like any college student, it is to find direction.

Acknowledgments:

Thanks to for the title ‘Sending Sora to Film School’.

Thanks to for corresponding with me about AI video model training.

Thanks to Rich Sutton for corresponding with me about AI goals.

Great one Mike!

You persuaded me. Why not go ahead and start building this yourself?