Notes on Auto-Contenting

Wherever there's attention, content will appear.

AI videos are appearing in media feeds everywhere. Comment sections complain about them. Marketers explain how to make them. The president of the United States regularly retweets them. And it’s all only accelerating.

Many of these videos are made through the time and effort of a human using AI models. However, a growing number are made by automated systems. In my own life, I talk to people every week who are designing systems that generate content infinitely. From a commercial point of view the concept has an obvious appeal: take infinite shots on goal. One should go in, right?

Somewhere in these discussions you feel bad, as if a social contract has been broken. The correct reaction to this encroaching reality is hard to know. Today I wanted to sketch out my own line of thought when I consider the sheer quantity and quality of automated AI content coming today, tomorrow, and for the rest of time.

Here it goes…

Computers can read and write as well as people. Many think this means computers are people. Others wonder if people are just computers. I ask, “What is language, and why does it seem so important when computers can do it?”

Language, I remind myself, is not me. Despite mainly communicating with others via text/email/voice, I am not my words. Language is a way to point to the world. By running this unnatural operating system in our heads, we can do cool things like share information with each other. Still, it’s surprising that we can run this same operating system on probabilistic AI models and get coherent outputs. I really didn’t expect that. Did you?

Language implies an intention. An intention implies a mind. When I see content online, I assume there’s an entity behind it with interests. This presumption makes us stop to consider the stuff we see online. AI-generated content takes advantage of this psychology. It’s why people react so poorly to AI slop. We feel like there’s nothing behind the content. It’s just an intention-less sponge made to soak up our attention.

In addition to language, computers have also cracked images, video, voice, and music. Despite lacking a body, computers can create digital content that is increasingly indistinguishable from what a human would make.

People seek attention anywhere it pools: trends, memes, current events. This fact has led to much mischief on the Internet. Empowered with AI, attention traffickers will wade into even deeper mischief.

AI-enabled internet hustlers love to volume spam. If they write 1,000 versions of an e-book, one might sell. Or if they write 1,000 versions of the same blog post, one could rank on Google. AI systems that automatically create this content are easier to build every day. As a result, increasingly less sophisticated entities are building them for increasingly dumber reasons. We might call operations like these “Auto-Contenting”.

Auto-Contenting: Using AI to generate customized content at high volumes to capture attention by sheer probability.

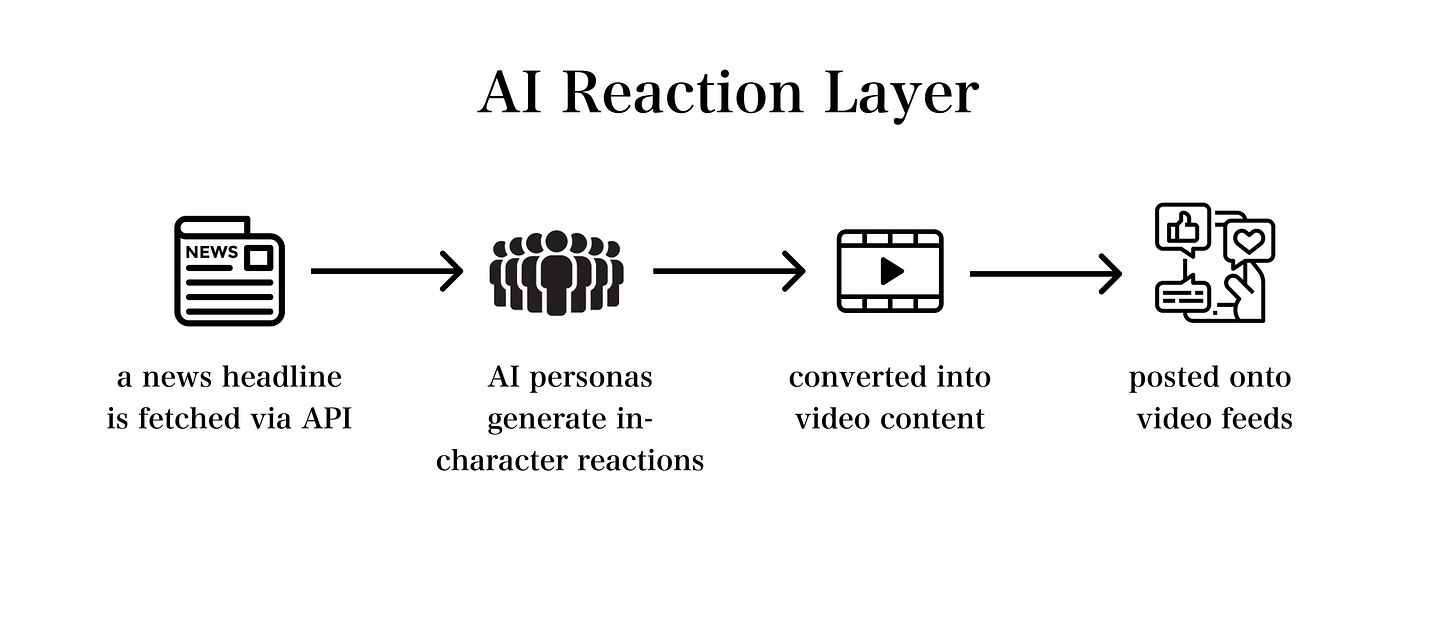
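To make the "sheer probability" in the definition concrete, here is a back-of-the-envelope sketch in Python. It assumes every piece of content has the same small chance of catching on and that attempts are independent of each other. Both are simplifications, and the 0.1% hit rate is a made-up number for illustration, not a measured one.

```python
def chance_of_at_least_one_hit(hit_rate: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds.

    Assumes each try succeeds with the same probability `hit_rate`
    (a hypothetical figure, not real data).
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # P(at least one hit) = 1 - P(every single try misses)
    return 1.0 - (1.0 - hit_rate) ** attempts


# Hypothetical: each e-book or blog post has a 0.1% chance of catching on.
HYPOTHETICAL_HIT_RATE = 0.001

for attempts in (1, 10, 100, 1000):
    odds = chance_of_at_least_one_hit(HYPOTHETICAL_HIT_RATE, attempts)
    print(f"{attempts:>5} attempts -> {odds:.1%} chance of at least one hit")
```

Under these assumptions, a single attempt is a long shot, but 1,000 attempts land at least one hit roughly 63% of the time. That is the whole commercial appeal: when each attempt costs almost nothing, volume substitutes for quality.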

I think an AI Reaction Layer will emerge on the Internet that auto-reacts to the world. Its creation will be an emergent phenomenon caused by many auto-contenting systems working together. These AI systems will create reaction content to current events (fetched automatically from the wire via API) across near-infinite personas. For example, an auto-contenting system might create daily videos of a plain-spoken southern conservative reacting to the latest political headlines. Importantly, the same auto-contenting system might also generate videos of a New England hippie, a Filipino financial advisor, and a talking wiener dog reacting to the same events. These systems will crave attention, not cultural sway. If there’s attention available for X persona doing Y thing, content will find the attention and absorb it. This may be a law of the internet: wherever there’s attention, content will appear.

A plausible law of the internet: wherever there’s attention, content will appear.

This Law of Attention already entraps many human creators. When a person makes content that fills an available attention gap, an algorithm rewards them with attention. “We liked your angry Boston man video, here are likes and views. Keep the supply up.” Platforms encourage people to keep fulfilling demand with the same type of content. The sad result is online creators are often incentivized to become flat caricatures of themselves. Attention is a powerful animating force, capable of hijacking humans as puppets.

We like ideas to travel and develop across human networks. Even if monied interests or mimetic imitation sometimes puppeteer human creators, we are comforted by the idea that a person is reacting to new information organically. Synthetic faces proliferating ideas feels unsettling in a new way. But what exactly is the difference?

Widespread auto-contenting will make attention perfectly efficient: supply will meet demand exactly. If auto-contenting systems fill every attention sink and there’s no available attention to capture, will people still make new content every day to capture attention? If they’re not trying to capture attention, what will they be trying to do exactly? Will it be liberating or demoralizing? How much do I do for attention? I’m afraid to answer that because it’s probably a lot more than I think.

Attention is an animating force of digital content. Where there is attention, people make content. With auto-contenting systems, content will emerge automatically wherever there is attention.

It’s unclear how social media platforms will react to auto-contenting. They may shut down AI content from third parties (Pinterest already has a ‘do not show me AI content’ toggle). They may auto-content themselves, though recall their business model is to distribute free content provided by their users. There is no clear cost incentive for these platforms to spend money generating content they already get for free, unless it proves significantly more engaging to people.

Auto-contenting systems could create mass apathy towards content. If synthetic content covers every viewpoint on any topic just to win my attention, it’s likely I’ll care less about all of them. The real question is whether I will care less about real people’s opinions as well. Will I also see people’s opinions as tokens flowing through vessels? It seems increasingly likely to me that mass AI content will reveal how most content is cheap bait for our attention. So what exactly will endure?

You can prompt the same LLM twice and get two different responses. When you prompt a human twice, you’re alarmed if the answer isn’t consistent. A person is anchored to a point-of-view. We trust the human’s sampling methods.

Doug Shapiro says “Trust is the new oil”. And he’s very smart. When the scarcity/abundance of a resource changes, value will accrue to the scarce resource in a value chain. With infinite content representing every point-of-view, this model predicts trust will become the scarce unit.

I don’t fully understand what “trust” is. It’s my reputation, my friends, my friends’ reputations. Ultimately, you are not the tokens you use. The fact that you can say something matters very little. The fact that you choose to say it… does matter. Otherwise you’re just another puppet for inherited tokens.

… at this point in the train of thought, I don’t know where to go next.

Auto-contenting feels inevitable to me. I expect it to be implemented at a massive scale by diverse groups. I also expect auto-contenting to absorb all available cheap attention. And I expect this absorption will disincentivize many things people do for attention on the internet because attention will be much harder to get. This puts media in strange, new territory.

The world is dynamic though and I’m confident a new game will emerge. I don’t know what.

It seems wise to start betting on trust and reputation.

Hi Mike. I like how you walked us through your train of thought and then stopped, meaning that you didn’t feel compelled to offer up an idea in a nice tidy package.

Thanks for the shoutout in this piece. As I wrote in Trust is the New Oil, I think about email as an analogy:

As an analogy, think about what’s happened to email over the last 20-30 years. In the late 1990s-early 2000s, it was still relatively novel to receive email and it was therefore high signal. Today, the noise has drowned out signal. We are overwhelmed by email spam, with spammers and email filters engaged in a never-ending cat-and-mouse game. Those filters are pretty good, but the costs to send bulk mail are so low that the spammers still have an economic incentive to try. Even with the best filters, spam slips through and, just as bad, legitimate emails get filtered out. (I recently found a trove of responses to the emails I send out from this Substack buried in my spam folder.)

The result is that email has been devalued as a communications medium. Click-through rates on legitimate emails have declined from 4-6% in 2010 to about 2% today. While it is still used at work and for a lot of marketing, its prior role has splintered into numerous apps: Discord, Insta, group chats, Slack, etc.

So, under this theory, open platforms like social collapse under the weight of all this stuff. Still used, but their utility will diminish. So, what’s the Discord/Telegram/Slack of content in the future?

Fantastic! 'Language is not me' is a key insight.