Just 4 Things (Sora release & the big Promise)

Sora drops, a studio makes a million-dollar promise, and some jello-like stuff

Welcome to another list-like column. Someone suggested the name ‘mellow Jello’ for these columns. And yes, if the blog is normally Intelligent Jello, then these list pieces are a mellower jello. Perhaps no less intelligent, just easier to digest.

I’m writing a longer piece about training video models, but I learned I had several facts wrong about the process, so it needs a rewrite. That piece will mark a return to jello of a more intelligent nature.

In this column I discuss:

Sora’s Debut

Whether video models are actually for filmmaking

A million-dollar promise from an AI film studio

Literal intelligent jello

A very good AI film

What AI desktop agents might mean for 2025

Sora’s Debut

The amazing Sora has been released to the public. Anyone can now use OpenAI’s video model.

Is it amazing?

Yes.

But so are the other video models.

When OpenAI’s court magicians first demonstrated Sora in February 2024, the general public was astonished. The videos were much better than anything other video models on the market could generate.

Since then, Runway, Luma, Pika, and Chinese companies like Kuaishou (Kling) and MiniMax (Hailuo) have released competitive models.

Now much breath is wasted arguing over the best model. Benchmarks are established. Side-by-side comparisons are shared. Whoever gets the lead only has it for a few months. Sometimes mere weeks. The Model Wars rage on.

This week the online conversation has been about whether Sora ‘flopped’.

But it increasingly feels like there won’t be a best model. Video models will likely become a commodity as they all reach a similar quality threshold. High-quality video generation will likely exist everywhere: in Instagram, in YouTube, in Adobe’s video editing tools, on your iPhone! Even non-video apps like Spotify might keep a video model around to generate visuals.

In my opinion, claims that Sora ‘flopped’ are exaggerated. They stem from people’s disappointment that Sora did not crush the other models. And it’s true that it didn’t. Sora does not have categorically better video generation. But it’s still faster than other models and has a better interface.

Sora’s outputs are great. But you quickly realize that workflow, ancillary tools, and your own flexibility are much more important than the model itself. There’s no single magic mega model. And there’s unlikely to be one soon.

At this point, I should disclose that I was part of the Sora early testing program. I’ve been using it (to little avail) for a while now.

I’ve personally found the most fun exercise is seeing what Sora ‘thinks’ of ideas or phrases. Dragging the model to my own prewritten script was typically un-fun, both for me and for the model. Poking it in strange places was fun.

Sora is a dreamer all the way. It does not like following directions.

And that may be fine.

What’s the point of video models?

I’m increasingly wondering whether the primary use case of video models will be something other than making narrative films. In fact, filmmaking might be selling these models short.

My sense is that many AI companies are thinking this way. Especially ones like OpenAI.

What exactly do I mean?

Large Language Models (LLMs) can read and write text, but no one considers ChatGPT a poem-writing tool or an email-writing tool. Rather, an LLM’s understanding of language induces a general understanding of the world: it can reason, problem-solve, give advice, and psychoanalyze you, as well as write poems and emails. All because it understands language.

A similar, less articulated hope lies in video models. It’s unclear what powers may emerge from watching enough video.

Right now, filmmaking is the sexy use case that grabs attention: make movies with AI! But increasingly I suspect there will be specialized filmmaking models alongside more general ones.

For example, RunwayML (the company with the most filmmaking features built into its product) has said its north star is making feature films. OpenAI’s north star is AGI.

Keep that in mind whenever either company talks.

A million-dollar Promise

Dave Clark and his super friends have founded an AI film studio, and it has millions of dollars in venture capital backing. The studio is called simply “Promise”.

What is an AI studio? That’s a very good question. It’s a studio that uses generative AI technology and bespoke workflows to create media faster and cheaper. And maybe better. But probably just faster and cheaper.

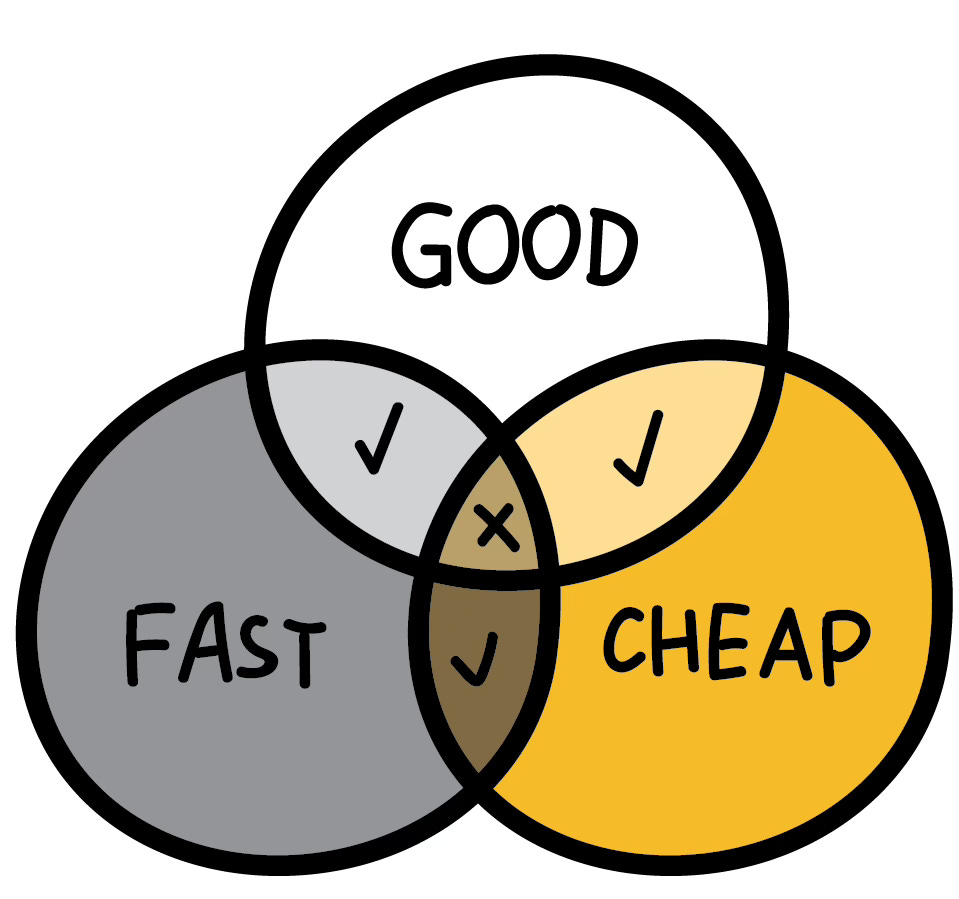

There’s a famous triangle in filmmaking: Fast. Cheap. Good. The rule is you can only have two. Whenever you try for all three, you end up in trouble.

My impression is many AI studios want all three.

The logic will go something like “If we can do stuff faster and do it cheaper, we’ll have more money and more time to do it better too.”

Now, Promise is not the first AI studio. But it is one of the first to raise millions in venture capital.

AI studios are great ideas. They will indeed do things faster and cheaper. They’re also easy to launch, and the technology is publicly available. So it’s unclear how an AI studio differentiates itself enough to warrant venture capital.

Promise has an impressive founding team. Dave Clark is one of the two most prominent AI filmmakers. Promise must also have a compelling pitch for why it’s different.

Here are two quotes from their website’s mission statement-y thing:

Promise will produce original films, series, and new formats in collaboration with the world's best Gen AI artists and storytellers.

[…]

We are making a significant investment in new technology. Our production workflow software MUSE will integrate the latest Generative AI tools throughout the creative process in a streamlined, collaborative, and secure production environment.

They appear to be building proprietary technology. Maybe something like Pixar? Maybe just workflows?

Who knows.

As one friend told me, “I’ll wait until I see their first promise”.

Mookoo at Sloomoo

The Sloomoo Institute in Los Angeles, a slime-themed museum, had a generative AI media event last week. Slime and gelatin, while not the same, are close relatives. So it felt like a must-cover event for Intelligent Jello.

Sloomoo is a slime name. If you want your own ‘slime name’, replace all the vowels with ‘oo’. So Mike becomes Mookoo, slime becomes Sloomoo, and AI becomes just Oooo. All three feel right.

The event had literal intelligent jello. AI filmmaker Louie Pecan made a 3D video controlled by a slime interface. A screen showed an animated environment. You could move the camera by sticking your hands in slime tubs that were hooked to various controls.

I had never thought of controlling generative AI with slime before. For now, I prefer a mouse and keyboard. But it makes you wonder about the novel options ahead of us. The obvious one to me is voice. I think voice interfaces will become very popular in 2025. Slime is a less obvious interface, but still a contender.

The event was organized by Machine Cinema (an LA event group I recommend checking out) and Backlot AI (an AI consulting group). Sloomoo stood out to me because it showcased the non-narrative applications of AI video. Generative video models have many applications, most of which will not be narrative filmmaking.

Filmmakers tend to ask, “How can I make my movie with AI?” It’s our bias in Los Angeles and a good question to ask. However, in practical terms most of the media created with this technology will not look like traditional narrative forms. It’s important to remember that.

A Very Good Film

But we are still getting plenty of narrative films, especially with the Sora release. Here’s a 4-minute video about the origin of humans, language, and culture.

It was made by one person, KNGMKRLabs. Apparently it took about 420 hours of generating and editing, mostly with Sora.

This film is the kind that makes you stop and think, “Yikes!” It still falls into the category of ‘narrated lists of images’, which remains the best strategy for playing to AI’s strengths. Despite this, it sweeps you up in a story.

It’s quite remarkable. A good storyteller can do quite a lot by themselves now.

We’re in very interesting territory.

And one more thing

As I mentioned before, I’ve added optional paid subscriptions to my Substack. Substantial stuff will always be free. But there will be a small prize below for jello addicts.

In this case, it’s about Desktop Agents and my gut guess about them in 2025.

Desktop Agents
